Testing of UIS

Because the UIS is meant to serve as a testbed, it is extremely important that the UIS itself be defect-free. This was achieved by thorough testing, carried out continuously throughout the development.

Three types of tests were performed:

- JUnit tests written by the UIS developer;

- front-end-based functional tests provided by the project leader;

- front-end-based acceptance tests provided by another developer.

Each of the three types of tests is characterized by a table of the same layout, describing the extent of the source code involved.

Each table also has a separate part giving the size of the UIS application itself, so that the difficulty and scope of each type of tests can be compared.

Columns description:

- Files: number of files (mainly Java; for UIS also JSP)

- Files' size: total size of all these files in KB

- Lines total: number of all lines (including empty lines, comments, etc.)

- Lines of code: number of lines containing code only

1. JUnit tests

Unit tests were implemented in the JUnit framework. The tests were created simultaneously with the development of the UIS application and are updated as new versions of UIS are developed.

Rows description:

- Application UIS is composed of two different types of source code:

  - Java

  - JSP

- JUnit UIS: tests of individual methods of the system and of basic sequences of method calls at the technical level

| | Files | Files' size [KB] | Lines total | Lines of code |
|---|---|---|---|---|
| Application UIS | | | | |
| Java | 95 | 391 | 9583 | 5190 |
| JSP | 22 | 144 | 2910 | 2810 |
| sum | 117 | 535 | 12493 | 8000 |
| Tests | | | | |
| JUnit UIS | 33 | 283 | 6978 | 4690 |
| ratio tests / UIS | 0.28 | 0.53 | 0.56 | 0.59 |

The line coverage of the available unit tests is greater than 85%.
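The "ratio tests / UIS" row in the table above is each test metric divided by the corresponding UIS total, rounded to two decimal places. A small Java sketch (the class name is illustrative, not part of the project) reproduces that row from the absolute values:

```java
/** Recomputes the "ratio tests / UIS" row from the absolute values in the JUnit table. */
final class TestToCodeRatio {

    private TestToCodeRatio() {
    }

    /** Ratio of a test metric to the matching UIS metric, rounded to two decimal places. */
    static double ratio(double tests, double application) {
        if (application <= 0) {
            throw new IllegalArgumentException("application metric must be positive");
        }
        return Math.round(tests / application * 100.0) / 100.0;
    }

    public static void main(String[] args) {
        String[] metrics = {"Files", "Files' size", "Lines total", "Lines of code"};
        double[] uis = {117, 535, 12493, 8000};  // "sum" row of Application UIS
        double[] junit = {33, 283, 6978, 4690};  // "JUnit UIS" row
        for (int i = 0; i < metrics.length; i++) {
            // Prints 0.28, 0.53, 0.56 and 0.59 (decimal separator follows the default locale)
            System.out.printf("%s: %.2f%n", metrics[i], ratio(junit[i], uis[i]));
        }
    }
}
```

The same computation yields the ratio rows of the other tables, e.g. 253 / 117 gives 2.16 for the number of files of the functional tests.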

2. Functional tests

Front-end-based functional tests simulate users accessing the system through its UI. These tests are written in Java with the Selenium WebDriver API, currently version 3.141.59. The tests are structured using the PageObject pattern, which, as independently verified, significantly reduces their maintenance effort and makes future extensions of the test set easier. The JUnit5 framework was used because it is well suited to organizing, maintaining and evaluating tests.

Note: Although they use JUnit, the functional tests are not unit tests; they follow a black-box testing approach.
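The PageObject pattern gives each page of the application one class that owns the page's element locators and offers page-level operations, so tests are written against pages rather than raw web elements. The sketch below is a hypothetical, dependency-free illustration: the `Browser` interface stands in for Selenium's `WebDriver` (where one would call `driver.findElement(By.id(...))` and then `sendKeys(...)` or `click()`), and the element IDs are invented, not taken from UIS.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical, minimal stand-in for Selenium's WebDriver, so the sketch needs no dependencies. */
interface Browser {
    void type(String elementId, String text);

    void click(String elementId);

    String title();
}

/** Page object for a login page: tests call its methods and never touch element IDs directly. */
final class LoginPage {
    // Hypothetical element IDs; a Selenium page object would keep By locators here instead.
    private static final String LOGIN_FIELD = "login";
    private static final String PASSWORD_FIELD = "password";
    private static final String SUBMIT_BUTTON = "submit";

    private final Browser browser;

    LoginPage(Browser browser) {
        this.browser = browser;
    }

    String title() {
        return browser.title();
    }

    /** Fills in the credentials and submits the form. */
    void loginAs(String user, String password) {
        browser.type(LOGIN_FIELD, user);
        browser.type(PASSWORD_FIELD, password);
        browser.click(SUBMIT_BUTTON);
    }
}

/** Fake browser that records interactions, so the page object can be exercised without a real browser. */
final class FakeBrowser implements Browser {
    final Map<String, String> typed = new HashMap<>();
    final List<String> clicked = new ArrayList<>();
    private final String title;

    FakeBrowser(String title) {
        this.title = title;
    }

    @Override
    public void type(String elementId, String text) {
        typed.put(elementId, text);
    }

    @Override
    public void click(String elementId) {
        clicked.add(elementId);
    }

    @Override
    public String title() {
        return title;
    }
}
```

A test only calls `loginAs(...)` and `title()`; if, for example, the ID of the submit button changes, only `LoginPage` has to be edited, which is where the maintenance benefit comes from.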

Rows description:

- Application UIS: see above

- Functional support: supporting classes packaged into a library that makes the Selenium framework convenient to use at several different levels, together with testing enhancements, configuration, logging and a test oracle that provides expected results for the tests

- JUnit functional: unit tests of the Functional support library

- Functional tests: tests based on the Functional support library

| | Files | Files' size [KB] | Lines total | Lines of code |
|---|---|---|---|---|
| Application UIS | | | | |
| Java | 95 | 391 | 9583 | 5190 |
| JSP | 22 | 144 | 2910 | 2810 |
| sum | 117 | 535 | 12493 | 8000 |
| Tests | | | | |
| JUnit functional | 33 | 113 | 3008 | 2622 |
| Functional support | 95 | 507 | 16206 | 8314 |
| Functional tests | 125 | 363 | 11300 | 9054 |
| sum | 253 | 983 | 30503 | 19988 |
| ratio tests / UIS | 2.16 | 1.84 | 2.44 | 2.50 |

2.1. Two parts of the functional tests

Functional testing is composed of two parts. We deliberately separated the first part, which controls the web application and provides all supporting functions, into an independently usable part named Functional support. One of its important subparts is the Oracle module, which implements the business logic in parallel and is convenient to use during tests for determining expected results. The Functional support uses the PageObject design pattern mentioned above and the JUnit5 framework for organizing tests.

During the development of this part, JUnit tests were created simultaneously to validate its functionality. In this way we developed a robust, well-tested library, which is used not only in the functional tests described here, but also in a completely different type of testing, acceptance testing (see below).

The library is used in current experiments with UIS and can be used by anyone else who uses the UIS application for their own research.
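The Oracle module is described here only as business logic implemented in parallel with the application and used to determine expected results. A minimal sketch of that idea, assuming a purely hypothetical enrolment rule (a subject has a fixed capacity, and enrolling an already enrolled student has no effect), computes the expected state independently of UIS; a test would then compare it with the state read from the UI through the page objects.

```java
import java.util.Collections;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical oracle: re-implements one business rule to predict the expected outcome of an action. */
final class EnrolmentOracle {
    private final int capacity;

    EnrolmentOracle(int capacity) {
        if (capacity < 0) {
            throw new IllegalArgumentException("capacity must not be negative");
        }
        this.capacity = capacity;
    }

    /**
     * Expected set of students enrolled in a subject after {@code student} tries to enrol.
     * Assumed rule: enrolment has no effect when the subject is already full.
     */
    Set<String> expectedAfterEnrol(Set<String> enrolled, String student) {
        Set<String> expected = new TreeSet<>(enrolled);
        if (expected.size() < capacity) {
            expected.add(student); // no effect if the student is already enrolled
        }
        return Collections.unmodifiableSet(expected);
    }
}
```

In a JUnit5 test the comparison would typically be `assertEquals(expected, actual)` from `org.junit.jupiter.api.Assertions`, with `actual` read from the page.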

The second part consists of the functional tests themselves. Writing the source code of the tests was preceded by a careful, systematic analysis, which concluded with the preparation of separate test suites and test cases. The analysis was based on detailed descriptions of use cases, each of which was described by a set of requirements. It can be demonstrated that the requirements cover the whole UIS application.

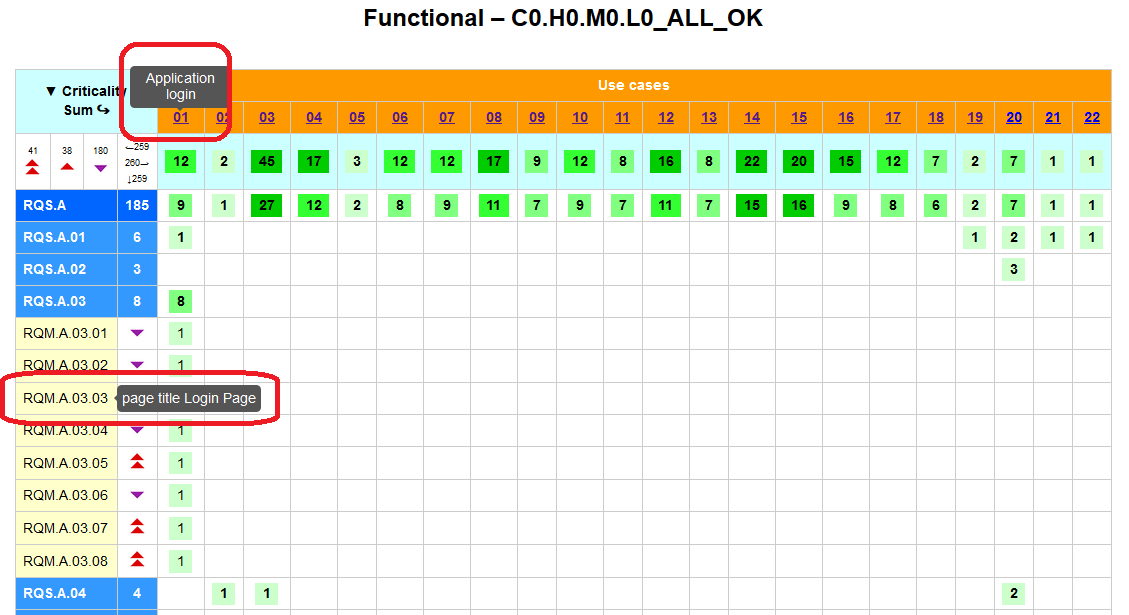

Use cases covered by requirements

Subsequently, the requirements were covered by test suites and test cases.
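Whether every requirement is covered by at least one test case can be checked mechanically from the traceability links. A minimal sketch, assuming the links are available as a map from test-case ID to the ID of the requirement it covers (how they would be exported from SquashTM is not described here), reduces the check to a set difference:

```java
import java.util.Collection;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Finds requirements without any test case, given test-case-to-requirement links. */
final class CoverageCheck {

    private CoverageCheck() {
    }

    /** Returns, in sorted order, the requirement IDs that no test case traces back to. */
    static Set<String> uncovered(Collection<String> requirementIds, Map<String, String> requirementByTestCase) {
        Set<String> missing = new TreeSet<>(requirementIds);
        missing.removeAll(requirementByTestCase.values());
        return missing;
    }
}
```

An empty result means that every listed requirement has at least one test case; the same check applied to use cases and requirements covers the level above.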

2.2. Usage of the test management system SquashTM

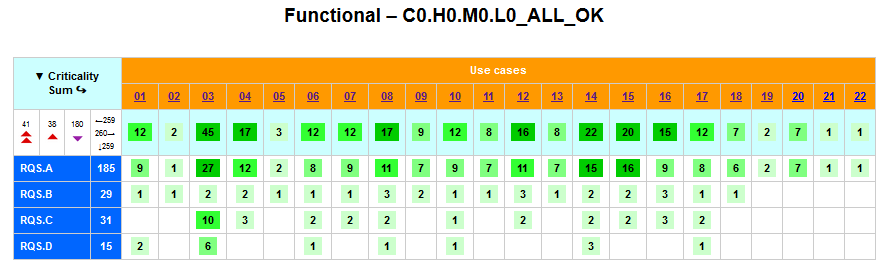

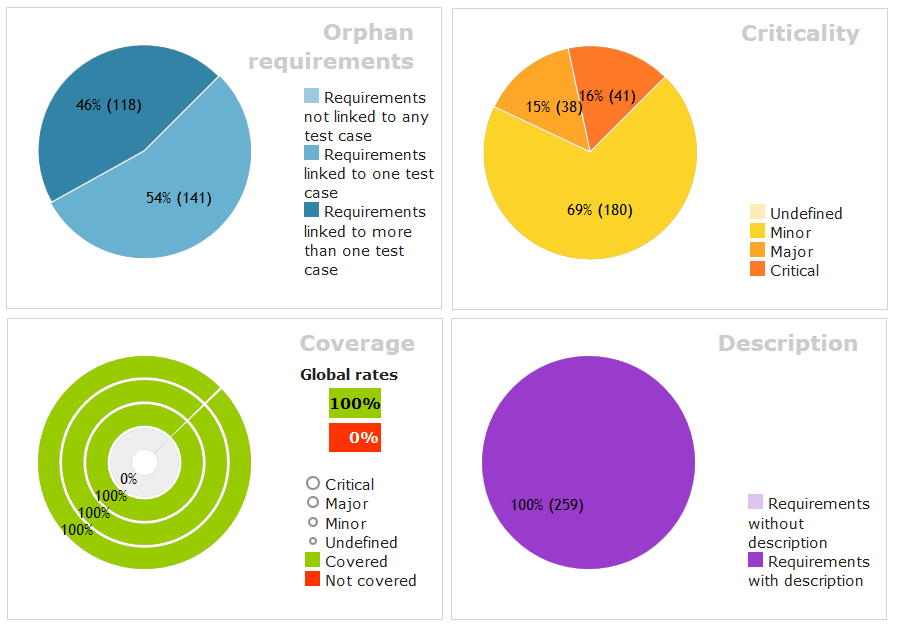

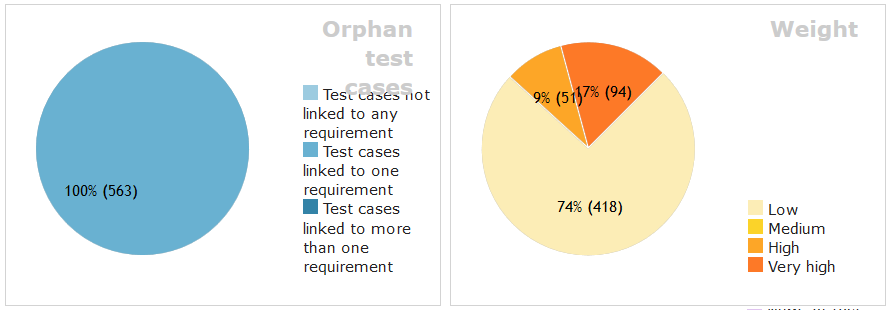

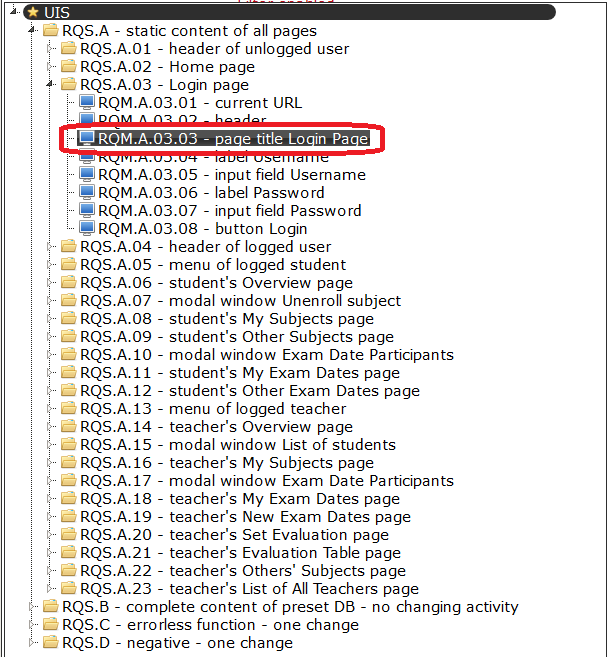

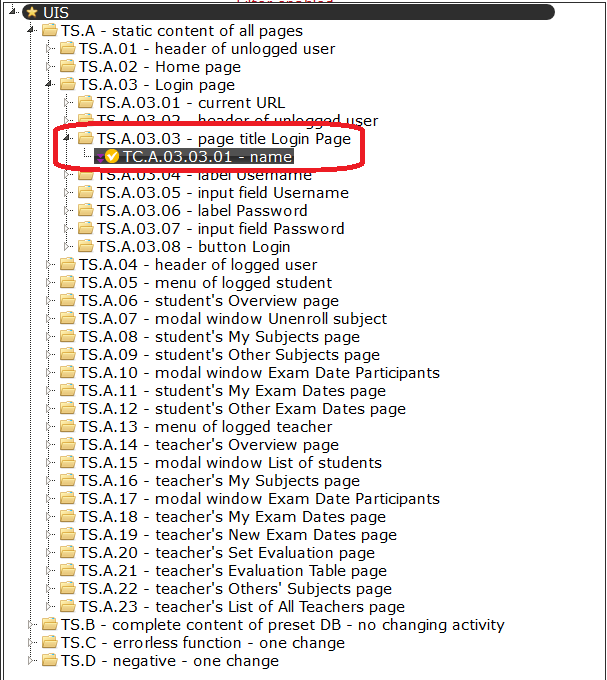

We used the test management system SquashTM, version 1.21.0, to organize the requirements (RQM) and the test cases (TC). In total, 259 requirements (detailed description) and 563 back-traceable test cases (detailed description) were created. The complete requirements and test cases, in a form importable into Squash, can be downloaded in section Download.

RQMs and TCs can be imported into SquashTM with the Squash-plugin-CLI.jar utility using the command:

```shell
java -jar Squash-plugin-CLI.jar -p UIS -r rqm-uis.json -t tc-uis.json
```

Squash provides a number of overviews and aggregated graphs.

Global characteristic of requirements

Global characteristic of test cases

As the last step, we programmed the functional tests themselves. Thanks to the preceding analysis, the source code of the tests is very well structured, and the test names correspond to the names of the test cases (see below). It is therefore easy to obtain detailed information about the purpose and setting of each test.

Global hierarchical structure of tests

2.3. Types of tests

The set of functional tests can be divided into the following types:

- Type A: static content of all pages (tests to pass)

  - verify the correct display of all web pages of the application

  - check the types of web elements, their descriptions, tooltips, page URLs, modal windows, etc.

  - cover 100% of elements

  - ensure that the web pages have the expected hierarchy and display all expected elements with the expected properties

- Type B: the complete initial DB content (tests to pass)

  - Note: the UIS application is preset with a carefully selected set of values, which cover as many combinations of UIS possibilities as possible

  - cover 100% of setting combinations on all pages and modal windows

  - are passive, meaning that they do not change the state of the UIS application

  - ensure that the web pages correctly display the content of the database

- Type C: error-free functionality (tests to pass)

  - check single active actions that change the state of the UIS, e.g. enrollment in a subject

  - check that an isolated action of a student is correctly reflected on all pages of the respective student and teacher and recorded in the database, and check the same for actions of a teacher

  - also check all known boundary conditions that induce a reaction at the edge (e.g. a teacher cancels the only subject he or she teaches)

  - cover 100% of the actions

  - ensure that all activities that change the state of the UIS work correctly for the respective student and teacher (Note: this is the difference between Type C and Type B tests, which check all combinations of all students and teachers at once)

- Type D: negative (tests to fail)

  - check all user inputs and application constraints set in the specifications

  - because the possibilities for user data input are very restricted, the test set contains a limited (but exhaustive) number of these tests

  - cover 100% of user inputs and application constraints

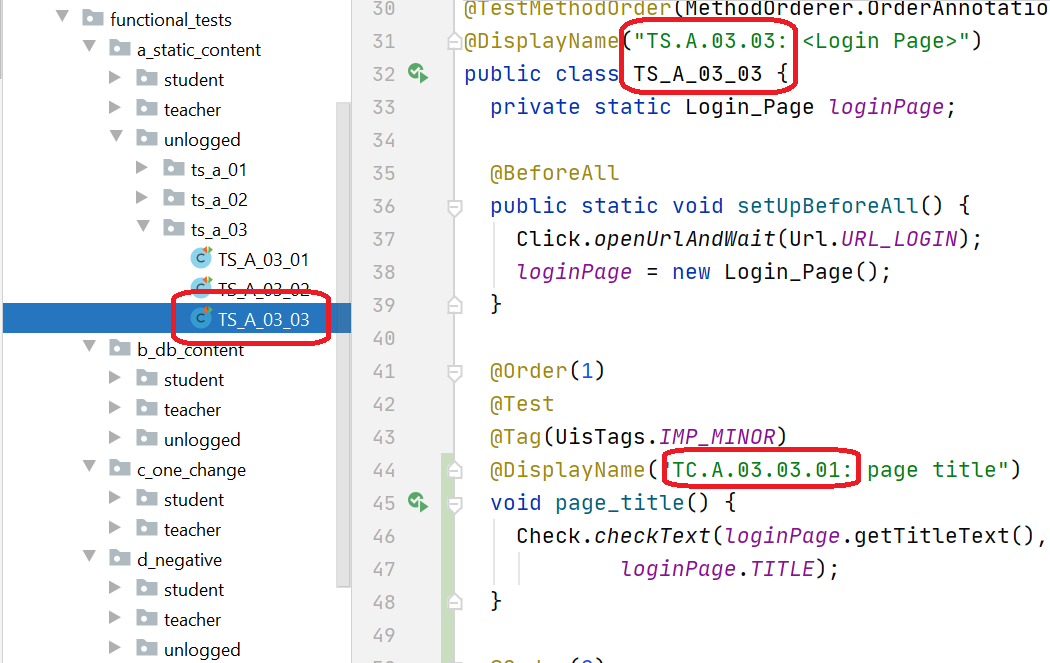

2.4. Traceability from use cases to test source code

Traceability can be demonstrated on the test of the title of the Login page.

The Login page is described in use case UC.01. This use case is covered by several requirements.

Specifically, the use case is covered by requirements from the set RQS.A.03. The test of the title of the Login page is described in RQM.A.03.03.

RQM.A.03.03 is covered by test cases from test suite TS.A.03.03, which contains only TC.A.03.03.01.

The source code of the test is stored in class TS_A_03_03, in a test named TC.A.03.03.01.

Forward and backward tracing can be easily conducted this way.
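Because the identifiers are built hierarchically, the chain from a test case back to its requirement set can be derived from the test-case ID alone. The sketch below assumes that every ID follows the pattern of the TC.A.03.03.01 example above; this rule is inferred from that single example, not documented, and the class name is hypothetical.

```java
/**
 * Derives the related traceability IDs from a test-case ID, assuming every ID follows the
 * pattern of the TC.A.03.03.01 example: TC.<type>.<set>.<requirement>.<case>.
 */
final class TraceIds {
    private final String type;
    private final String set;
    private final String requirement;

    private TraceIds(String type, String set, String requirement) {
        this.type = type;
        this.set = set;
        this.requirement = requirement;
    }

    static TraceIds parse(String testCaseId) {
        String[] parts = testCaseId.split("\\.");
        if (parts.length != 5 || !parts[0].equals("TC")) {
            throw new IllegalArgumentException("unexpected test-case ID: " + testCaseId);
        }
        return new TraceIds(parts[1], parts[2], parts[3]);
    }

    /** Requirement set, e.g. RQS.A.03. */
    String requirementSet() {
        return "RQS." + type + "." + set;
    }

    /** Requirement, e.g. RQM.A.03.03. */
    String requirementId() {
        return "RQM." + type + "." + set + "." + requirement;
    }

    /** Test suite, e.g. TS.A.03.03. */
    String testSuite() {
        return "TS." + type + "." + set + "." + requirement;
    }

    /** Java class holding the test, e.g. TS_A_03_03. */
    String testClass() {
        return testSuite().replace('.', '_');
    }
}
```

For TC.A.03.03.01 this yields RQS.A.03, RQM.A.03.03, TS.A.03.03 and the class TS_A_03_03, matching the chain traced above.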

2.5. Tags

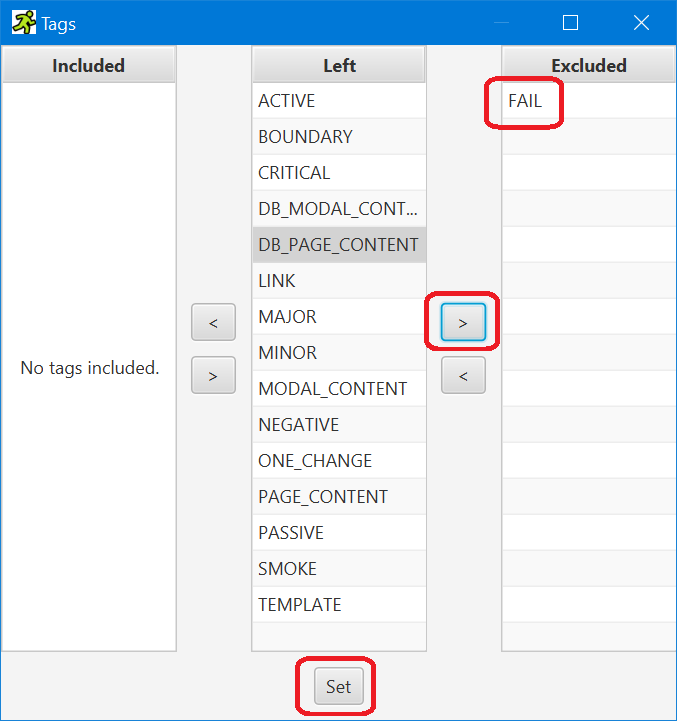

Apart from the division into the four basic types described above, the functional tests are also marked with tags, which allow them to be run selectively (see below).

Tags can be divided into two basic groups:

- tags that mark the type of the test

  - PASSIVE: Type A and Type B tests

  - ACTIVE: Type C and Type D tests

  - ONE_CHANGE: Type C tests

  - NEGATIVE: Type D tests

  - PAGE_CONTENT: Type A tests for web pages

  - MODAL_CONTENT: Type A tests for modal windows

  - DB_PAGE_CONTENT: Type B tests for web pages

  - DB_MODAL_CONTENT: Type B tests for modal windows (time-consuming)

  - LINK: Type A and Type B tests for all existing links

  - TEMPLATE: Type A tests for recurring elements of web pages (typically the header and menus)

  - BOUNDARY: Type C and Type D tests for boundary conditions

  - SMOKE: a selected set of Type A tests, including the TEMPLATE tests

  - FAIL: a test that fails, for debugging purposes and to demonstrate a failure

- tags that mark the importance of the test, based on the importance of the RQM and TC

  - CRITICAL

  - MAJOR

  - MINOR

2.6. Running of the tests

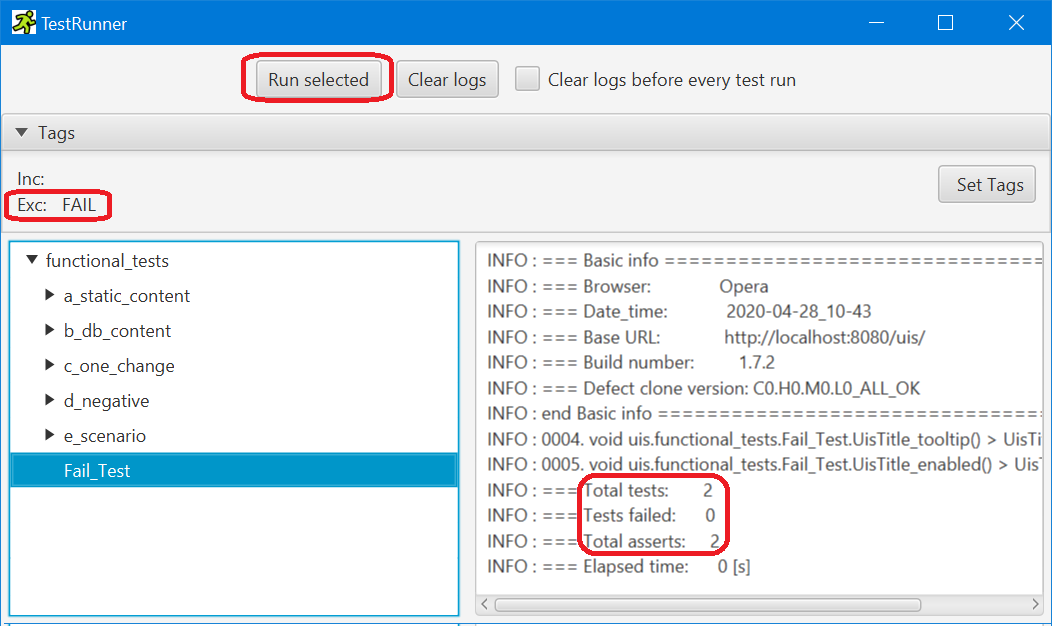

Functional tests can be run with the GUI TestRunner application.

The user can easily select which tests or sets of tests will be run and combine this selection with included and excluded tags. The application is intuitive to use.

The first example shows a basic run of all tests from the group Fail_Test, which contains three tests: two of them pass and one fails, as can be seen in the test log.

The second example shows the FAIL tag set as excluded, i.e. tests with this tag are not run. The test log shows that only two tests were run, and both passed.


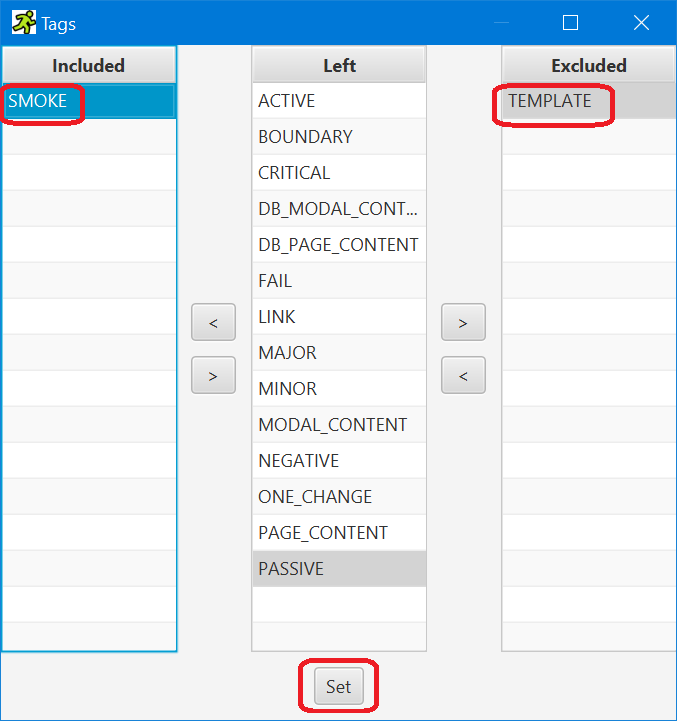

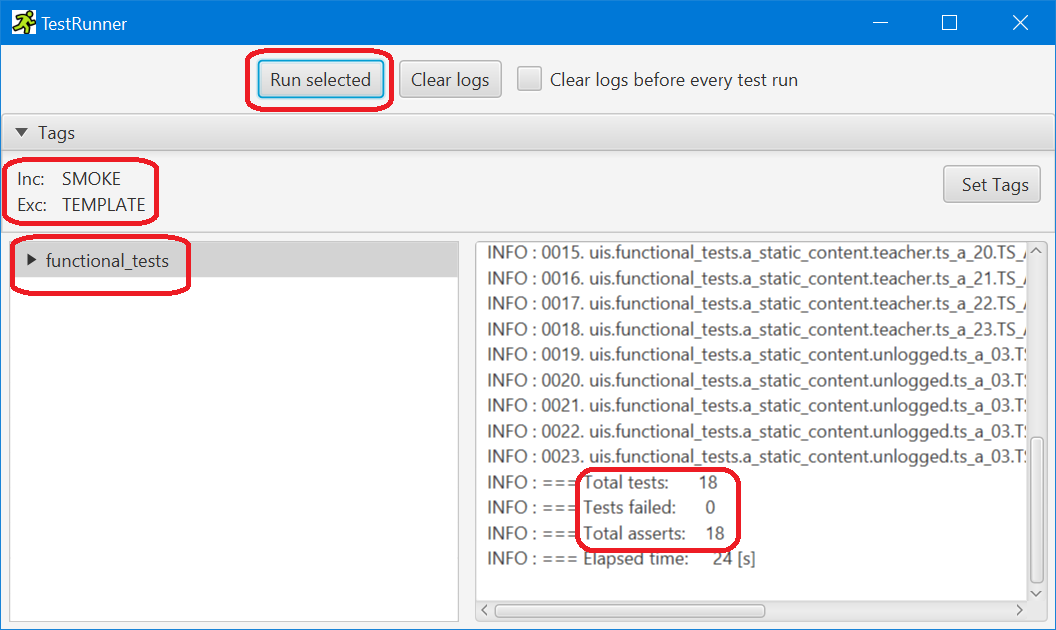


The third example shows the SMOKE tag set as included, i.e. only tests with this tag are run. In addition, the TEMPLATE tag is set as excluded, so tests with this tag are removed from that group. The program is run over the whole set of functional tests.


The whole course of the test run is logged in detail. The log is displayed on the screen and saved in the file logs/test-results-log.txt.
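The behaviour in the three examples matches the usual JUnit Platform semantics for tag filters: a test is a candidate if it carries at least one included tag (or if no tag is included at all), and it is skipped if it carries any excluded tag. Assuming TestRunner follows these semantics (its implementation is not described here), the selection rule can be sketched as follows; the class name is hypothetical.

```java
import java.util.Collections;
import java.util.Set;

/**
 * Tag-based test selection, assuming JUnit Platform-style semantics:
 * a test runs if it has at least one included tag (or nothing is included)
 * and none of the excluded tags.
 */
final class TagFilter {
    private final Set<String> included;
    private final Set<String> excluded;

    TagFilter(Set<String> included, Set<String> excluded) {
        this.included = Set.copyOf(included);
        this.excluded = Set.copyOf(excluded);
    }

    boolean shouldRun(Set<String> testTags) {
        // An empty include set means "no restriction": every test is a candidate.
        boolean candidate = included.isEmpty() || !Collections.disjoint(included, testTags);
        // Any excluded tag vetoes the test, even if it also carries an included tag.
        boolean vetoed = !Collections.disjoint(excluded, testTags);
        return candidate && !vetoed;
    }
}
```

The third example corresponds to `new TagFilter(Set.of("SMOKE"), Set.of("TEMPLATE"))`. In JUnit5 the tags themselves are attached to test classes or methods with the `@Tag` annotation (`org.junit.jupiter.api.Tag`), e.g. `@Tag("SMOKE")`.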

2.7. Processing of results for back tracing

The test-results-log.txt file mentioned above can be transformed into a Squash-TC-results.json file with the following format:

```json
{
  "campaign": "C0.H0.M0.L1_S_S_02",
  "iteration": "2020-04-28_08-33",
  "results": [
    {
      "id": "TC.A.01.01.01",
      "result": "success"
    },
    {
      "id": "TC.A.01.01.02",
      "result": "failure"
    },
    ...
```
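The layout of test-results-log.txt is not shown here, so the following sketch starts from results that have already been extracted from the log into a map from test-case ID to pass/fail, and only produces the JSON structure shown above. The class name is hypothetical, and only the two result values visible in the excerpt (`success`, `failure`) are used.

```java
import java.util.Map;
import java.util.StringJoiner;

/** Builds a Squash-TC-results.json document from already extracted test results (hypothetical helper). */
final class SquashResultsJson {

    private SquashResultsJson() {
    }

    /** Produces the fields shown above: campaign, iteration and results[{id, result}]. */
    static String toJson(String campaign, String iteration, Map<String, Boolean> passedById) {
        StringJoiner results = new StringJoiner(",\n", "[\n", "\n  ]");
        passedById.forEach((id, passed) -> results.add(
                "    {\"id\": " + quote(id) + ", \"result\": " + quote(passed ? "success" : "failure") + "}"));
        return "{\n  \"campaign\": " + quote(campaign)
                + ",\n  \"iteration\": " + quote(iteration)
                + ",\n  \"results\": " + results + "\n}";
    }

    /** Minimal JSON string quoting: escapes backslashes and double quotes only. */
    private static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }
}
```

Passing a `LinkedHashMap` keeps the test cases in the order in which they appear in the log.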

This file can be imported into SquashTM with the Squash-plugin-CLI.jar utility using the command:

```shell
java -jar Squash-plugin-CLI.jar -p UIS -c Squash-TC-results.json
```

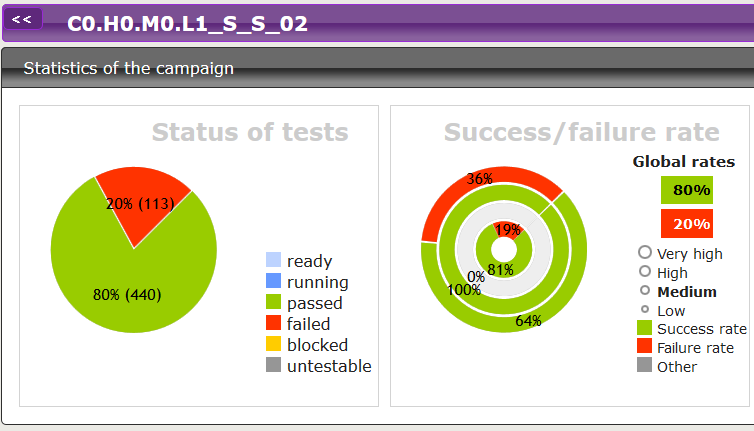

This import creates a new campaign and iteration in SquashTM.

Moreover, the test results in the test-results-log.txt file can be shown in an overview of use-case coverage.

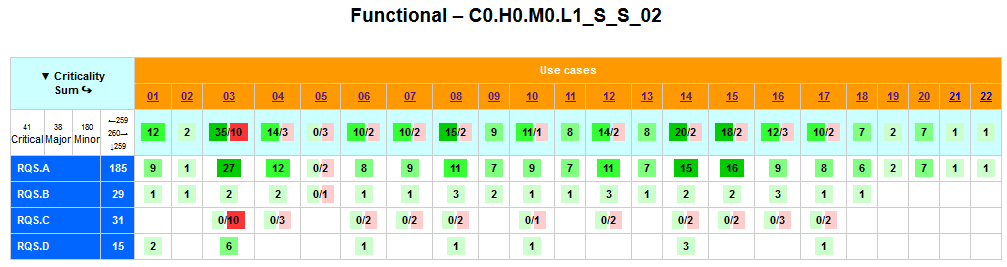

Note: The example shows the results of tests of the defect clone C0.H0.M0.L1_S_S_02, so that the results of failed tests are shown as well.

This overview shows the aggregated results of the tests.

2.8. Number of functional tests

Altogether, 1245 tests can be run. However, one test can contain several asserts (e.g. comparisons of expected and actual values), so the number of asserts is a more precise measure. This is most apparent for the Type B and Type C tests in the table below.

| Type | Number of tests | Number of asserts | Elapsed time [s] |
|---|---|---|---|
| A | 862 | 862 | 206 |
| B | 224 | 2251 | 732 |
| C | 108 | 4225 | 2445 |
| D | 51 | 155 | 186 |
| sum | 1245 | 7493 | 3569 |

2.9. Tests of all defect clones

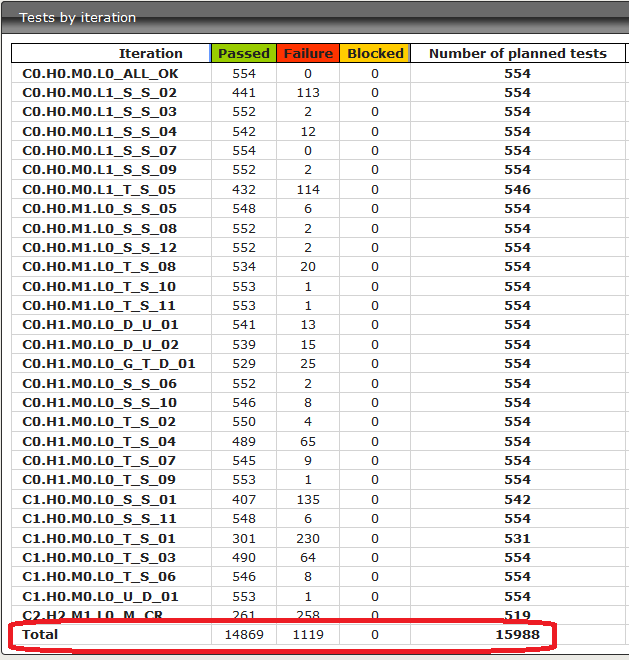

The functional tests were run on the whole set of defect clones, and the results were collected in SquashTM.

Note: Comparing the number of functional tests above (1245) with the number of tests recorded in SquashTM (554) reveals a significant difference. This is not a reduction: the tests are aggregated in SquashTM.

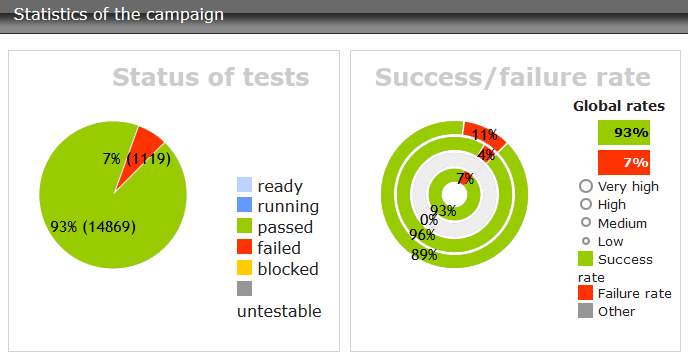

Overview of complete results

Statistics of complete results

3. Acceptance tests

Acceptance tests simulate user actions in the UI using the Functional support module. They are written in Robot Framework (version 3.2) and use the public operations of the Functional support module, which encapsulates the use of the PageObject pattern. Robot Framework was chosen for its support of behavior-driven testing and because it allows test scenarios to be written almost in natural language.

Rows description:

- Application UIS: see above

- Functional support: supporting classes packaged into a library that makes the Selenium framework convenient to use at several different levels, together with testing enhancements, configuration, logging and a test oracle that provides expected results for the tests

- Functional support - wrappers: automatically generated classes that wrap the public operations of Functional support and make them accessible from the Robot Framework environment

- Keywords support: prepared Robot Framework keywords that encapsulate Functional support operations and also provide composite operations

- Test cases: instances of test cases and their test data rows

| | Files | Files' size [KB] | Lines total | Lines of code |
|---|---|---|---|---|
| Application UIS | | | | |
| Java | 95 | 391 | 9583 | 5190 |
| JSP | 22 | 144 | 2910 | 2810 |
| sum | 117 | 535 | 12493 | 8000 |
| Tests | | | | |
| Functional support | 95 | 507 | 16206 | 8314 |
| Functional support - wrappers | 30 | 39 | 1181 | 575 |
| Keywords support | 54 | 75 | \* | 1855 |
| Test cases | 68 | 67 | \* | 2686 |

\* The tool used for the statistics cannot analyze Robot Framework scripts.

3.1. Three parts of the acceptance tests

Acceptance testing is composed of three parts. As in the functional tests, the first part, which controls the web application and provides all supporting functions, is separated into the independently usable Functional support. One of its important subparts is the Oracle module, which implements the business logic in parallel and is convenient to use during tests for determining expected results. The Functional support uses the PageObject design pattern mentioned above and the JUnit5 framework for organizing tests.

The second part consists of the Functional support wrappers. These wrappers encapsulate the public operations of Functional support and make them accessible from the Robot Framework environment. The wrappers are created automatically by a custom utility, WrapperGenerator, which is part of the acceptance test project.

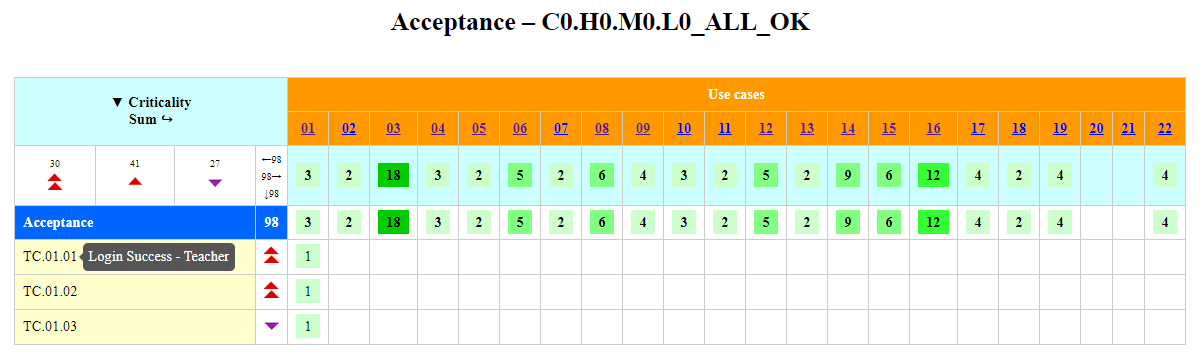

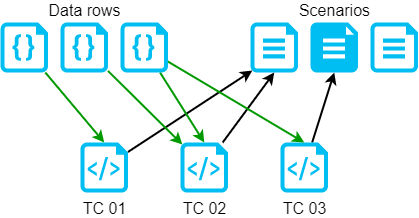
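WrapperGenerator is a custom utility whose source is not reproduced here. The general technique of generating delegating wrappers can be sketched with `java.lang.reflect`: list the public methods of a class and emit one wrapper method per operation. Both `FunctionalSupport` and the shape of the generated code below are hypothetical, and how the generated classes are exposed to Robot Framework is not shown.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.Arrays;
import java.util.Comparator;
import java.util.StringJoiner;

/** Hypothetical stand-in for one class of the Functional support library. */
class FunctionalSupport {
    public void enrollSubject(String student, String subject) {
        // would drive the UI through the page objects
    }

    public int countSubjects(String student) {
        return 0;
    }
}

/** Sketch of wrapper generation: one delegating method per public method of the target class. */
final class WrapperSketch {

    private WrapperSketch() {
    }

    static String generate(Class<?> target, String wrapperName) {
        String targetName = target.getSimpleName();
        StringBuilder src = new StringBuilder()
                .append("public class ").append(wrapperName).append(" {\n")
                .append("    private final ").append(targetName)
                .append(" delegate = new ").append(targetName).append("();\n");
        Method[] methods = target.getDeclaredMethods();
        // Reflection returns methods in no particular order; sort for reproducible output.
        Arrays.sort(methods, Comparator.comparing(Method::getName));
        for (Method m : methods) {
            if (!Modifier.isPublic(m.getModifiers()) || m.isSynthetic()) {
                continue;
            }
            StringJoiner params = new StringJoiner(", ");
            StringJoiner args = new StringJoiner(", ");
            Class<?>[] types = m.getParameterTypes();
            for (int i = 0; i < types.length; i++) {
                params.add(types[i].getSimpleName() + " arg" + i);
                args.add("arg" + i);
            }
            String call = "delegate." + m.getName() + "(" + args + ")";
            String body = m.getReturnType() == void.class ? call + ";" : "return " + call + ";";
            src.append("    public ").append(m.getReturnType().getSimpleName()).append(' ')
                    .append(m.getName()).append('(').append(params).append(") { ")
                    .append(body).append(" }\n");
        }
        return src.append("}\n").toString();
    }
}
```

This sketch ignores generic type parameters and checked exceptions; a real generator would have to handle them.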

The third part is a set of Robot Framework keywords, test cases and test data. The solution contains 122 acceptance test cases, and each test case has at least two different data setups. Each keyword uses a scenario template. These scenarios are based on the use case specification of UIS.

Use cases covered by test cases

3.2. Behavior-driven development pattern

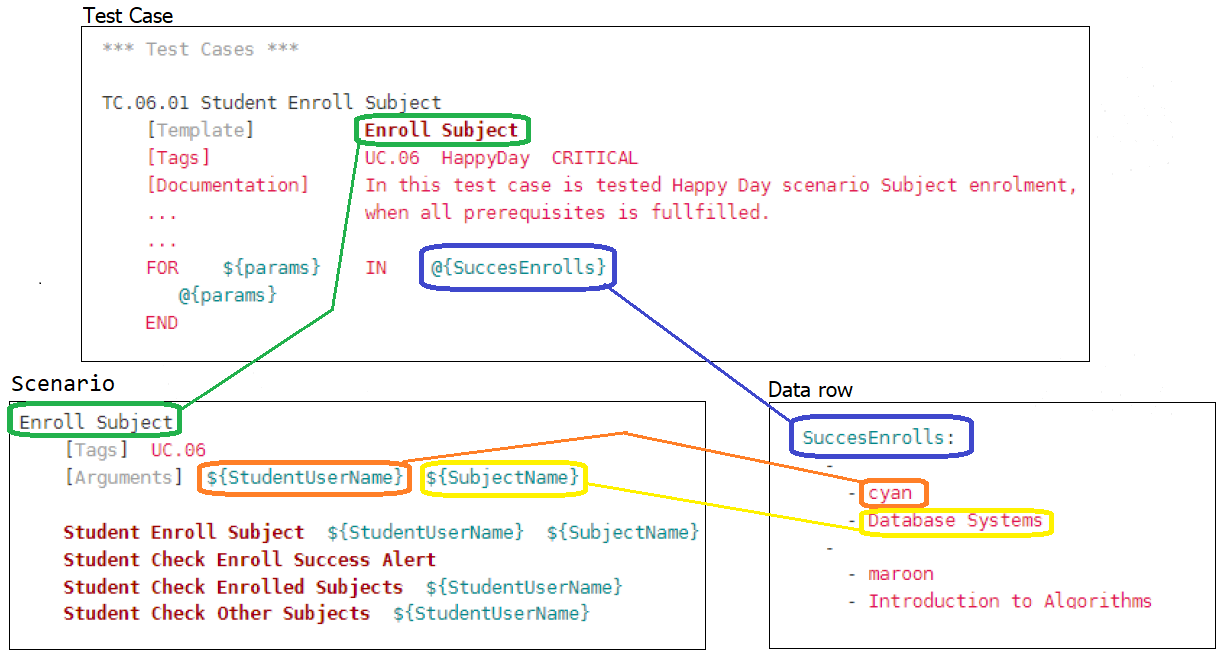

All test cases were developed using the behavior-driven development (BDD) pattern, which implies separating data rows (specific data sets), scenarios and test cases. The pattern also implies separating the test scenarios from the technological representation of the application; this separation is provided by Functional support. A test developer can use all public functions of Functional support and can prepare scenarios without knowing how specific elements of the application are accessed.

Relation between test cases and data rows and relation between test cases and scenarios

Every test case has one defined scenario and at least one data row. A data row contains a specific data combination for one test case. For public sequences (e.g. all teachers in the system), data rows can be shared (reused). A scenario is a predefined sequence of steps that fulfils the goal of the test.

Code examples of data row, test case and scenario for subject enrolment
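The project expresses data rows, scenarios and test cases in Robot Framework. Purely as an illustration, the same separation can be sketched in Java: a scenario is a fixed sequence of steps parameterised by a data row, and a test case binds one scenario to its data rows. The steps are plain strings here, whereas real steps would call Functional support operations; all names and fields are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** A data row: one concrete data combination (hypothetical fields for subject enrolment). */
final class DataRow {
    final String student;
    final String subject;

    DataRow(String student, String subject) {
        this.student = student;
        this.subject = subject;
    }
}

/** A scenario: a predefined sequence of steps, independent of how page elements are accessed. */
@FunctionalInterface
interface Scenario {
    List<String> steps(DataRow row);
}

/** A test case: exactly one scenario and at least one data row. */
final class TestCase {
    final String name;
    private final Scenario scenario;
    private final List<DataRow> rows;

    TestCase(String name, Scenario scenario, List<DataRow> rows) {
        if (rows.isEmpty()) {
            throw new IllegalArgumentException("a test case needs at least one data row");
        }
        this.name = name;
        this.scenario = scenario;
        this.rows = List.copyOf(rows);
    }

    /** Expands the test case into one list of concrete steps per data row. */
    List<List<String>> expand() {
        List<List<String>> runs = new ArrayList<>();
        for (DataRow row : rows) {
            runs.add(scenario.steps(row));
        }
        return runs;
    }
}
```

Because data rows are independent objects, the same list of rows (e.g. all teachers in the system) can be passed to several test cases.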

3.3. Types of scenarios

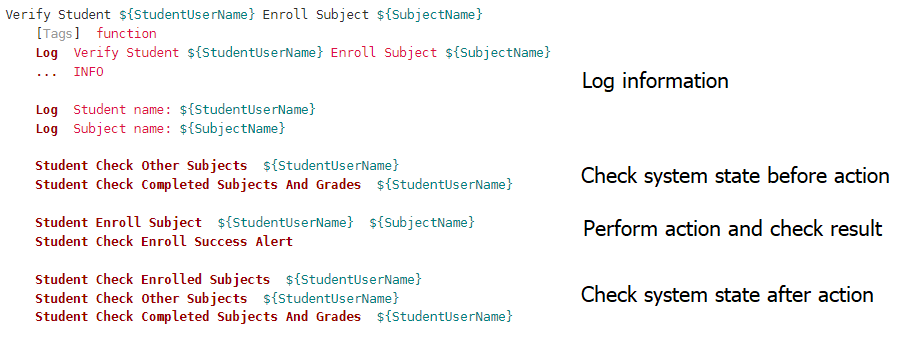

There are two types of scenarios in the acceptance test project.

- The first type is closely connected with the application use cases. Most of these scenarios contain only a few steps that check one specific action (e.g. enrolling in a subject).

- Scenarios of the second type test the application in a more sophisticated way. Only 23 of the 122 test cases in total are based on second-type scenarios. These scenarios contain more actions and use special verified keywords. A special verified keyword performs one action, which is then verified as thoroughly as possible.
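A verified keyword performs one action and then checks its effects as thoroughly as possible. One way to sketch this idea in Java (hypothetical names; the actual keywords are written in Robot Framework) is to run the action once, evaluate every registered check, and report all failed checks.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BooleanSupplier;

/** Hypothetical verified action: perform one action, then evaluate every registered check. */
final class VerifiedAction {
    private final String name;
    private final Runnable action;
    private final Map<String, BooleanSupplier> checks = new LinkedHashMap<>();

    VerifiedAction(String name, Runnable action) {
        this.name = name;
        this.action = action;
    }

    /** Registers a check to evaluate after the action; returns this for chaining. */
    VerifiedAction verify(String description, BooleanSupplier check) {
        checks.put(description, check);
        return this;
    }

    /** Runs the action once and returns descriptions of all failed checks (empty if all passed). */
    List<String> run() {
        action.run();
        List<String> failures = new ArrayList<>();
        checks.forEach((description, check) -> {
            if (!check.getAsBoolean()) {
                failures.add(name + ": " + description);
            }
        });
        return failures;
    }
}
```

Collecting every failure instead of stopping at the first one is a design choice of this sketch: a single run then reports everything that went wrong after the action.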